How Do Voices and Facial Expressions Convey Emotions Across Cultures?


How do you gauge someone’s emotions and feelings? Where do you usually look, what do you listen for, and what do you sense when you try to read (estimate) another person’s emotions and state of mind? When someone is talking in front of you, how do you tell whether they are happy, angry, or frustrated?

Visual cues include facial signals such as eye contact and the upturned corners of the mouth, as well as hand and body movements. When someone is speaking, however, some people infer that person’s emotions from the tone, volume, and intensity of their voice; these are estimates based on auditory cues. You may also infer emotions from the content of the conversation, which is an estimate based on context.

If the person is right in front of you, you will likely use both sight and hearing to form an overall judgment, especially if you know the person’s personality. On a phone call or in an online meeting without video, you can rely only on the voice and the content of the conversation.

In my previous column, The Potential of Emotion in UX Design, I wrote about how emotions are classified and how a system can sense them. This time, I will discuss the cues we use to estimate other people’s temporary emotions.

Understanding emotions

Short-lived feelings such as joy, anger, sadness, fear, and anxiety are called emotions; in other words, an emotion is one type of feeling. Some definitions limit emotions to feelings that others can observe and judge. Reading other people’s expressions and state of mind, known as affective perception, plays a big role in navigating social life, and we then need to act appropriately on what we perceive. Many of you have probably experienced conflicts or miscommunication caused by misreading someone’s emotions. Note that emotion is distinguished from mood, which is generally a longer-lasting and more subtle feeling.

Faces? Voices? How do we perceive emotions?

So, what do you observe or perceive in others to estimate their emotions?

The perception of emotion has long been studied and debated in psychology and cognitive science. Recognizing emotions from facial expressions (facial affect recognition) has been studied especially extensively, and emotion recognition was once believed to be universal: regardless of culture or race, people were thought to use the same cues to recognize emotions. Recent studies, however, suggest that emotion recognition varies by culture, and that the information people rely on to read others’ emotions depends on where they live, how they were raised, and their cultural background. The paper introduced below compared emotion recognition in Japanese and Dutch participants. Let me share its conclusion before we go further:

Japanese tend to judge emotions based on voice, while Dutch tend to rely on facial expressions.

What do you think of this conclusion? As a Japanese person, I was somewhat skeptical at first: “Don’t Japanese people use both voice and facial expressions to gauge others’ emotions?” Let’s look at the study more closely.

Cross-cultural comparison of audiovisual emotional recognition (from the paper)

The paper Comparison of Audiovisual Emotional Recognition by Face and Voice (by Akihiro Tanaka, from J-STAGE) was presented at the 74th Annual Meeting of the Japanese Psychological Association in 2010.

The authors conducted an interesting experiment that focused on facial expressions and voice to explore cultural differences in emotion recognition.

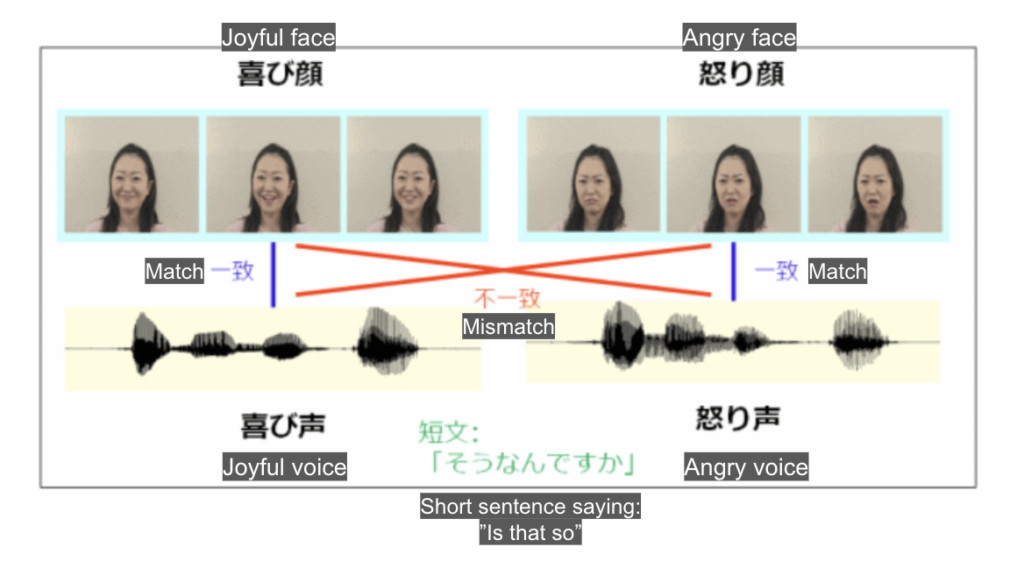

Japanese and Dutch speakers were asked to say a neutral phrase, “Is that so?”, in their native language while expressing “joy” or “anger” with both their face and their voice. From these recordings, videos were prepared showing:

- Matching emotional expressions (e.g., joyful face + joyful voice)

- Conflicting emotional expressions (e.g., joyful face + angry voice)

The recorded videos were edited into these two categories (correcting for timing differences between the facial expressions and the voice). Japanese and Dutch students then watched the videos in both Japanese and Dutch and performed the following tasks:

- Facial Task: Ignore the voice and choose between joy and anger based on facial expressions only

- Voice Task: Ignore facial expressions and choose between joy and anger based on the voice only

In these tasks, participants could perceive both facial expressions and voice but were deliberately told to ignore one of the two cues. The study aimed to measure how much the ignored cue still influenced their judgments, and whether this differed depending on whether the video was in the participant’s native language or a foreign one.
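To make the design concrete, here is a minimal Python sketch of the 2 × 2 face/voice pairing and one possible way to quantify how much the ignored cue interferes: accuracy on matching trials minus accuracy on conflicting trials. The `Trial` record, the `interference` score, and the sample responses are hypothetical illustrations, not the paper’s actual analysis or data.

```python
from dataclasses import dataclass
from itertools import product

EMOTIONS = ("joy", "anger")


@dataclass
class Trial:
    face: str      # emotion expressed by the face in the video
    voice: str     # emotion expressed by the voice in the video
    task: str      # "face" (Facial Task) or "voice" (Voice Task)
    response: str  # the participant's answer ("joy" or "anger")

    @property
    def target(self) -> str:
        # The correct answer is the emotion of the cue the task asks about;
        # the other cue is the one the participant should ignore.
        return self.face if self.task == "face" else self.voice

    @property
    def congruent(self) -> bool:
        # Matching expression, e.g. joyful face + joyful voice.
        return self.face == self.voice


def stimulus_conditions():
    """Every face/voice pairing: two matching and two conflicting."""
    return list(product(EMOTIONS, EMOTIONS))


def accuracy(trials):
    """Share of trials answered correctly (NaN if there are none)."""
    if not trials:
        return float("nan")
    return sum(t.response == t.target for t in trials) / len(trials)


def interference(trials, task):
    """Accuracy on matching trials minus accuracy on conflicting trials.

    Hypothetical summary score: a larger value means the cue the
    participant was asked to ignore pulled their judgments more."""
    in_task = [t for t in trials if t.task == task]
    matching = [t for t in in_task if t.congruent]
    conflicting = [t for t in in_task if not t.congruent]
    return accuracy(matching) - accuracy(conflicting)


if __name__ == "__main__":
    # Made-up responses from one imaginary participant in the Facial Task.
    trials = [
        Trial("joy", "joy", "face", "joy"),
        Trial("anger", "anger", "face", "anger"),
        Trial("joy", "anger", "face", "anger"),  # the angry voice won out
        Trial("anger", "joy", "face", "anger"),
    ]
    print(stimulus_conditions())
    print(interference(trials, "face"))  # 1.0 - 0.5 = 0.5
```

Comparing a score like this across participant groups and across native- and foreign-language videos corresponds to the questions the study set out to answer.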

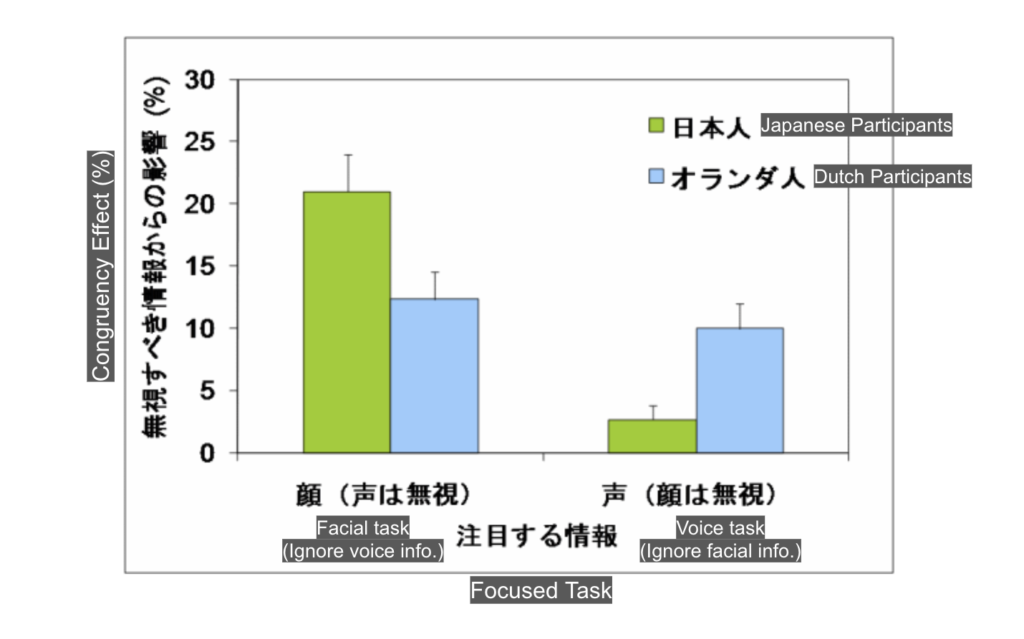

Results showed:

English translation by Spectrum Tokyo

- Japanese participants were influenced by the voice in the Facial Task (judging emotions from facial expressions only)

- Japanese participants were less influenced by facial expressions in the Voice Task (judging emotions from the voice only)

- Dutch participants achieved high accuracy (97% or above) in the Facial Task under all conditions

- Japanese participants’ accuracy in the Facial Task dropped for “joyful face + angry voice”

The paper concludes:

Japanese tend to be significantly influenced by voice when perceiving emotions in a conversation.

Other papers and studies have also suggested that, in Western cultures, emotion recognition is heavily influenced by facial expressions and gestures. The authors also offer an explanation for why Japanese participants’ accuracy in the Facial Task dropped for “joyful face + angry voice”: the anger perceived in the voice, which they were supposed to ignore, may have made the joyful face look like a forced smile (a smile concealing negative emotions). This explanation may resonate with many Japanese readers.

Is the high skill of Japanese voice actors linked to how emotions are perceived in Japan?

The paper found that Japanese people tend to perceive emotions from auditory information; in other words, Japan could be described as a culture that places great weight on what is heard. Because the experiment used a neutral phrase, participants likely inferred emotions from features such as tone, intonation, rhythm, and voice quality. Elements like these, which complement the verbal content, are known as “paralanguage,” and sensitivity to subtle paralinguistic expression may be a characteristic of Japanese people.

Manga and anime are often highlighted as part of Japanese subculture, and voice actors are essential to anime production. While facial expressions and gestures are conveyed through the animation, voice actors are responsible for the audio, especially the paralanguage. I believe Japanese voice actors, who must satisfy and convince an audience this sensitive to vocal nuance, have a very high level of skill.

In 2021, the American film distribution company Universal Pictures posted a clip of a scene from the movie “Gladiator” dubbed in German, Italian, English, Japanese, and French on Facebook, saying that the Japanese version was a must-listen (from Universal Studio DE’s Facebook page). The post received comments such as “I don’t understand the words, but the Japanese version conveys strength.” Of course, opinions varied: some preferred the Italian version, and others felt the English one fit best. If you’re interested, take a look at the comments on the post.

Communication with robots (AI agents) and emotions

Voice actors perform with human voices, paralanguage included. Systems, on the other hand, generate speech from text using speech synthesis, which has also become capable of expressing paralanguage in detail. When an emotion such as joy or anger is specified, a speech synthesis system can adjust tone, rhythm, and volume to match it, and these expressive capabilities are improving rapidly.
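As a rough illustration of how a system might request emotional prosody, the Python sketch below wraps text in the W3C SSML `<prosody>` element, whose `pitch`, `rate`, and `volume` attributes accept labels such as “high”, “fast”, and “loud”. The emotion-to-label presets and the `to_ssml` helper are hypothetical choices for illustration; real speech engines differ in which SSML features they support and how they render them.

```python
from xml.sax.saxutils import escape

# Hypothetical presets: these emotion-to-label pairings are illustrative
# assumptions, not measured values. The labels themselves are valid values
# for the SSML 1.1 <prosody> attributes pitch, rate, and volume.
PROSODY_PRESETS = {
    "joy": {"pitch": "high", "rate": "fast", "volume": "loud"},
    "anger": {"pitch": "low", "rate": "fast", "volume": "x-loud"},
    "sadness": {"pitch": "low", "rate": "slow", "volume": "soft"},
    "neutral": {"pitch": "medium", "rate": "medium", "volume": "medium"},
}


def to_ssml(text: str, emotion: str, lang: str = "en-US") -> str:
    """Wrap text in an SSML document whose <prosody> settings suit an emotion.

    Unknown emotions fall back to the neutral preset, and the text is
    XML-escaped so characters like & and < cannot break the markup."""
    attrs = PROSODY_PRESETS.get(emotion, PROSODY_PRESETS["neutral"])
    attr_str = " ".join(f'{name}="{value}"' for name, value in attrs.items())
    return (
        '<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{lang}">'
        f"<prosody {attr_str}>{escape(text)}</prosody>"
        "</speak>"
    )


if __name__ == "__main__":
    # The same neutral phrase used in the experiment, voiced two ways.
    print(to_ssml("Is that so?", "joy"))
    print(to_ssml("Is that so?", "anger"))
```

Keeping the emotion presets in one table makes it easy to tune them per language or culture, which is where the differences discussed above could come into play.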

Interactive robots and AI agents (avatars) are becoming more common, and there is a growing need for them to hold natural conversations with humans. Robots may also start responding with emotions, and avatars created with generative AI can already speak emotionally. In such situations, how do people perceive the emotions of these robots or avatars? Is it still through facial expressions, movements, and voice, just as with humans? If so, the cultural differences discussed above will likely need to be considered when designing these dialogues.

Conclusion

Recent research suggests that the cues people use to estimate (perceive) emotions are not universal but vary across cultures. I am conducting research that takes these characteristics into account when designing how robots and avatars express themselves in dialogue. While the experiment above shows that Japanese participants lean toward the voice, another study suggests that within Japan, adults (university students and older) rely more on the voice, while younger people rely more on facial expressions. There are also reports that the way emotions are expressed, not only how they are perceived, varies across cultures. If you are interested, I recommend reading further on these cultural differences.

If you want to understand and experience emotions firsthand, I recommend the board game The Game of Saying “Huh” published by Gentosha. In this game, players say short words such as “Ha,” “Wow,” “Huh,” and “Like” using only their voice and facial expressions, and the others try to guess the intended nuance. I was surprised that, even without acting training, players could convey emotions through tone, volume, and speaking speed, and that others could guess them. Some words were hard to tell apart. If you’ve become interested in emotional perception, why not give this game a try?